Facial recognition technology has long been criticised for potentially breaching human rights. Now, new research has delivered a fresh blow to the security product, which was designed to catch criminals walking the streets.

Facial recognition technology, which maps facial features and body measurements to compare with biometric data on police databases and watchlists, is currently being trialled by the Metropolitan Police, South Wales Police and Leicestershire Police.

But academics from the University of East Anglia and Monash University, Australia, believe the software needs urgent regulation because it exists in a “legal vacuum”.

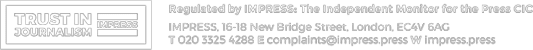

The academics claim the technology needs tighter regulation. Image credit: Flickr

Doctor Joe Purshouse of the UEA School of Law, who conducted the research with Professor Liz Campbell of Monash University, said: “These FRT trials have been operating in a legal vacuum.

“There is currently no legal framework specifically regulating the police use of FRT [facial recognition technology].”

He continued: “Parliament should set out rules governing the scope of the power of the police to deploy FRT surveillance in public spaces to ensure consistency across police forces.

“As it currently stands, police forces trialling FRT are left to come up with divergent, and sometimes troubling, policies and practices for the execution of their FRT operations.”

Watch lists need tighter regulation

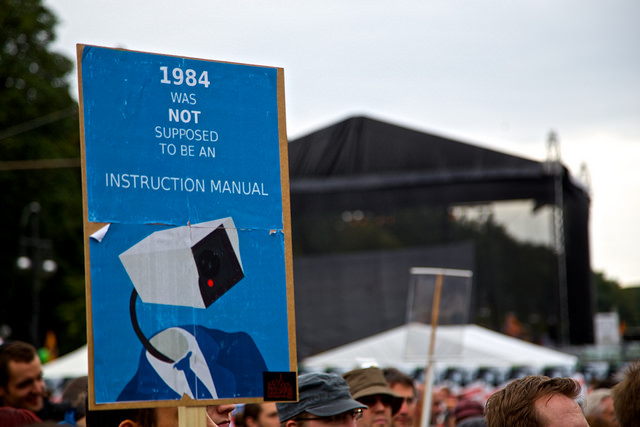

Surveillance vans are placed in key parts of central London. Image credit: Big Brother Watch

Police forces are able to draw images from the social media accounts of persons of interest for mapping, though these individuals need not have recent or serious convictions. In some cases, police retain images on the system of people who were never arrested.

Unreliable technology?

“There appears to be a credible risk that FRT technology will undermine the legitimacy of the police in the eyes of already over-policed groups,” said Purshouse.

Although police forces say the technology has been an early success, Purshouse and Campbell argue there is no clear way of measuring that success. They also claim the technology is inaccurate and disproportionately includes certain individuals on watch lists.

Their research claims women and ethnic minorities are also disproportionately likely to be misidentified, which raises further complications.

Other vocal opponents

"It's turning members of the public into walking ID cards."

Director of Big Brother Watch @silkiecarlo says it's "outrageous" that police are set to test live facial recognition technology in London.

Head here to read more on this trial: https://t.co/oxOGPz9GKm pic.twitter.com/85TjXwpfnU

— Sky News (@SkyNews) December 15, 2018

The technology, capable of scanning 18,000 faces per minute, has already received widespread criticism from human rights groups and campaigners.

Hannah Couchman, Policy and Campaigns Officer at Liberty, said: “The police have tried to suggest the rollout of this invasive technology is an open and transparent trial. We have witnessed the opposite. The use of unmarked vans and false assertions in the limited public statements available shows the police have no intention of gaining the public’s consent.”

Civil liberties and privacy organisation Big Brother Watch have also described the Metropolitan Police’s use of mass facial recognition surveillance technology – capable of scanning 300 faces per second – as “authoritarian, dangerous and lawless”.

Campaigners stress that mass surveillance of innocent people in public violates three articles of the European Convention on Human Rights: Article 8, the right to a private life; Article 10, the right to freedom of expression; and Article 11, the right to freedom of assembly and association.